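Overview

This article shows how to enable GPU support in a Harvester virtual machine and deploy an RKE2-based Kubernetes cluster on it, so that the cluster can discover and use the GPU.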

Prerequisites

| Env | Version |
| --- | --- |
| Harvester | v1.4.0 |
| OS | Ubuntu 22.04.5 LTS |
| Kernel | 5.15.0-140-generic |
| GPU | NVIDIA GT 710 |
| Docker | 27.4.1 |
| Rancher | v2.9.2 |
| RKE2 | v1.28.15+rke2r1 |

Passing the GPU through to a Harvester VM

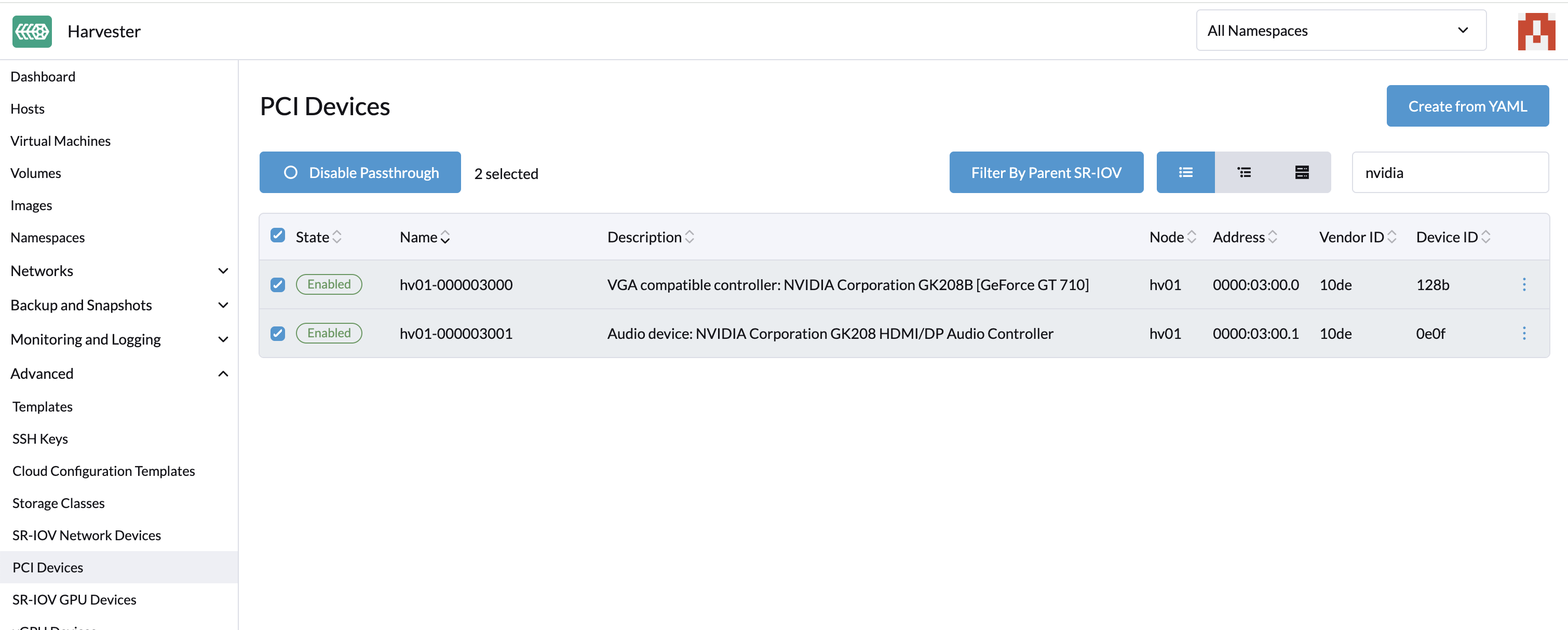

On the PCI Devices page, search for nvidia. Two devices are listed (the GPU and its HDMI audio function). Enable Passthrough on both of them.

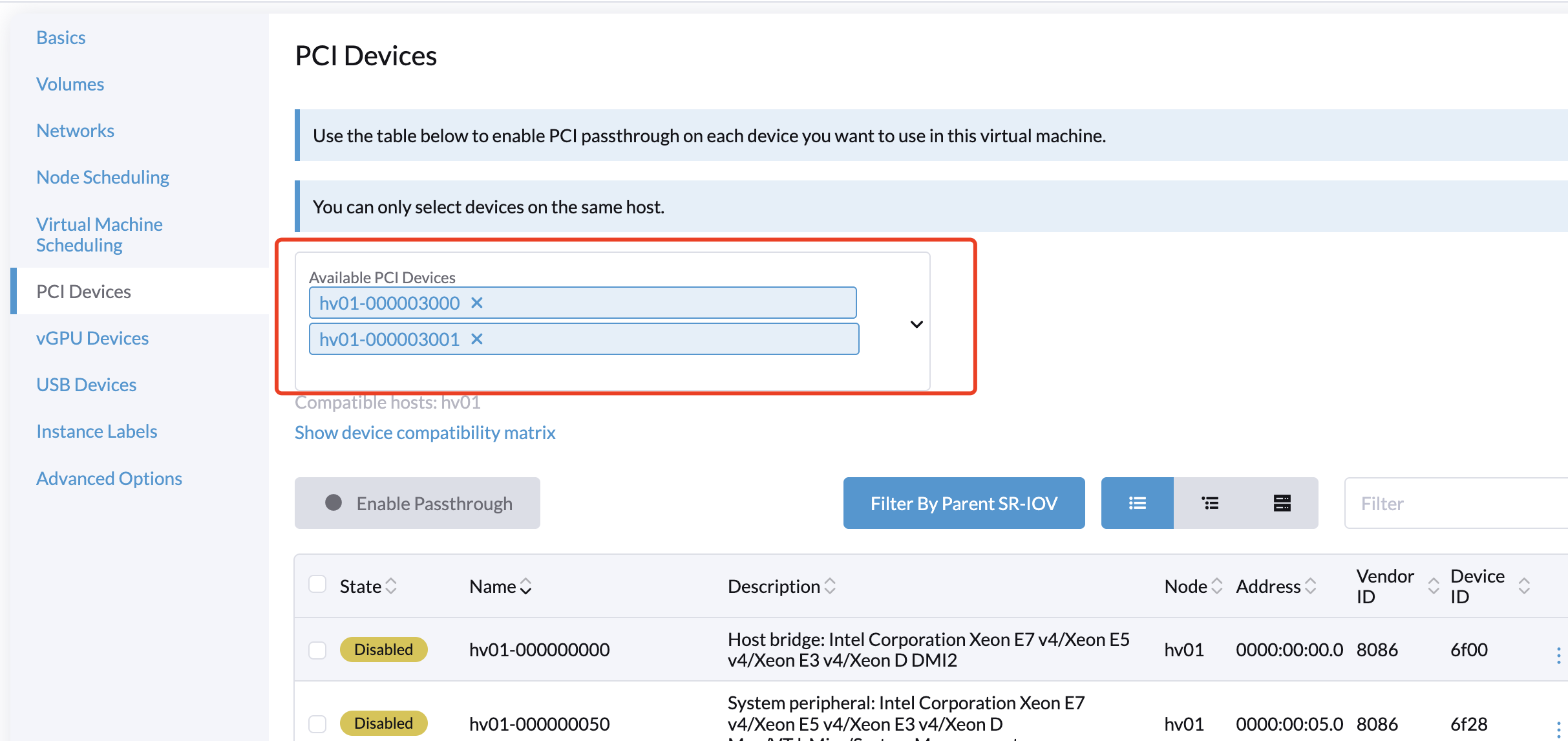

Create a virtual machine. On its PCI Devices tab, select the two NVIDIA devices you just enabled for passthrough.

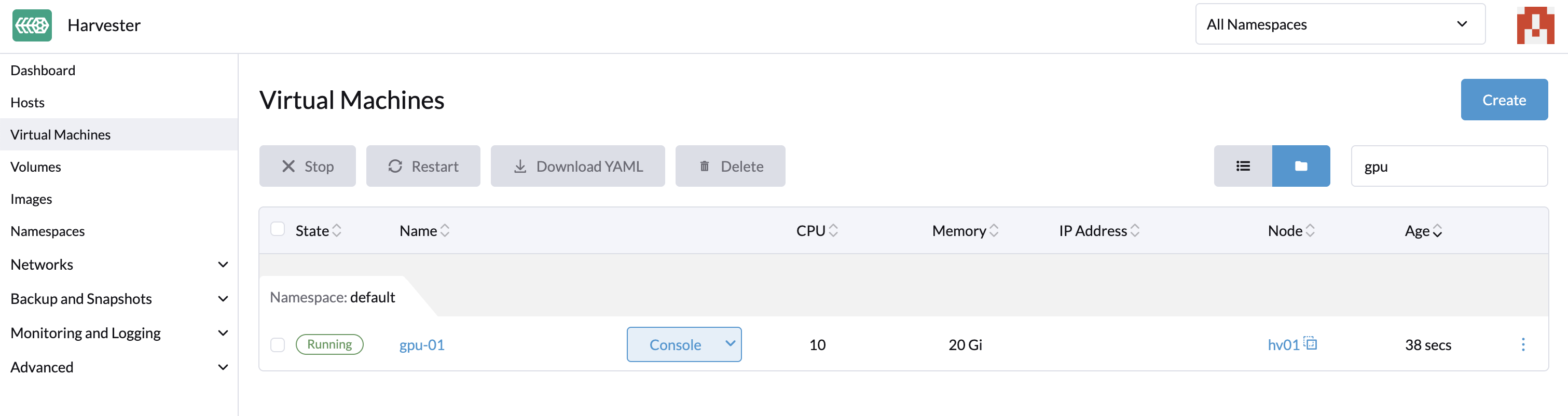

Wait for the virtual machine to be created.

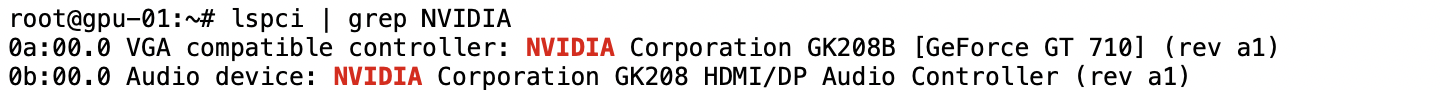

Inside the VM, check that the GPU has been passed through:

```
0a:00.0 VGA compatible controller: NVIDIA Corporation GK208B [GeForce GT 710] (rev a1)
0b:00.0 Audio device: NVIDIA Corporation GK208 HDMI/DP Audio Controller (rev a1)
```
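This check can be automated, for example in a provisioning script. The sketch below assumes the listing comes from `lspci` (package `pciutils`) piped on stdin. `check_gpu_passthrough` is a hypothetical helper and is not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise NVIDIA PCI functions from `lspci` output on stdin.
# Prints how many NVIDIA functions were found. Returns 1 if no NVIDIA display controller is present.
check_gpu_passthrough() {
  local lines
  lines=$(grep -i 'nvidia')
  # The GPU itself must appear as a VGA compatible controller (datacenter cards show up as 3D controller).
  if ! printf '%s\n' "$lines" | grep -Eqi 'VGA compatible controller|3D controller'; then
    echo "no NVIDIA display controller found" >&2
    return 1
  fi
  # Count the non-empty lines, i.e. the NVIDIA functions (GPU, HDMI audio, ...).
  printf '%s\n' "$lines" | grep -c .
}
```

For the GT 710 (GPU plus HDMI audio), `lspci | check_gpu_passthrough` should print `2`. `lspci -nnk -d 10de:` restricts the listing to NVIDIA's vendor ID and also shows which kernel driver is bound. That makes it useful again after the driver is installed.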

Installing the NVIDIA driver

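Before installing, check NVIDIA's website for the driver branch that supports your card. A branch that is too new may not work, because newer branches drop support for older GPUs such as the Kepler-based GT 710. For the GT 710, use the 470 branch: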

```shell
apt update
apt install nvidia-utils-470 nvidia-headless-470 nvidia-driver-470
```
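You do not have to look the branch up by hand. Ubuntu's `ubuntu-drivers devices` command (from `ubuntu-drivers-common`) reports a recommended driver package. The sketch below extracts that package and derives its branch number, so the three packages stay on the same branch. The helper names are hypothetical. The parsing assumes the usual `driver : nvidia-driver-NNN - ... recommended` line format. If Ubuntu's recommendation and NVIDIA's support matrix disagree, go with NVIDIA.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the recommended NVIDIA driver package from
# `ubuntu-drivers devices` output on stdin, e.g. "nvidia-driver-470".
recommended_nvidia_driver() {
  # Fields on a driver line: "driver" ":" "<package>" "-" ... "recommended"
  awk '$1 == "driver" && /recommended/ { print $3; exit }'
}

# Hypothetical helper: turn "nvidia-driver-470" (or "nvidia-driver-470-server") into "470".
driver_branch() {
  sed -E 's/^nvidia-driver-([0-9]+).*/\1/'
}
```

Example: `branch=$(ubuntu-drivers devices | recommended_nvidia_driver | driver_branch)`, followed by `apt install nvidia-utils-$branch nvidia-headless-$branch nvidia-driver-$branch`. You will often need to reboot before the new kernel module is loaded.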

Check that the driver is installed correctly. The GPU should now be listed:

```
GPU 0: NVIDIA GeForce GT 710 (UUID: GPU-62b0c46d-488e-6257-7419-c147d83ee409)
```
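In a script, the same check can gate the later steps. The sketch below is minimal and assumes the listing comes from `nvidia-smi -L` on stdin, the same command used later in the Kubernetes test. `require_gpus` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count GPUs in `nvidia-smi -L` output on stdin.
# Prints the count, then fails if fewer than the expected number (default 1) were found.
require_gpus() {
  local expected="${1:-1}" found
  # Each GPU is reported on a line starting with "GPU <index>:".
  found=$(grep -c '^GPU [0-9]')
  echo "$found"
  [ "$found" -ge "$expected" ]
}
```

`nvidia-smi -L | require_gpus 1` prints the number of GPUs found. It fails if the driver cannot see at least one GPU.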

Installing Docker

Using the Aliyun mirror (mainland China):

```shell
# Back up the original sources and switch to the Aliyun mirror.
# The codename must match the OS: Ubuntu 22.04 is "jammy".
sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak
sudo tee /etc/apt/sources.list > /dev/null << EOF
deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
EOF

# Add the Docker CE repository from the Aliyun mirror.
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
sudo apt-get -y update

# install_version must be set beforehand to a full version string listed by
# `apt-cache madison docker-ce`, not just "27.4.1".
sudo apt-get -y install docker-ce=${install_version} docker-ce-cli=${install_version} --allow-downgrades
sudo systemctl start docker
sudo systemctl enable docker
# Pin the version so that apt upgrades do not replace it.
sudo apt-mark hold docker-ce docker-ce-cli
```

Using the official Docker repository (outside mainland China):

```shell
# Remove older Docker packages, if any.
sudo apt-get remove docker docker-engine docker.io containerd runc
sudo apt-get install apt-transport-https ca-certificates curl gnupg-agent software-properties-common ifupdown -y
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
sudo apt-get update

# Either install the latest release:
sudo apt-get install docker-ce docker-ce-cli containerd.io -y
# ...or list the available versions and install a specific one:
apt-cache madison docker-ce
sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io

sudo systemctl start docker
sudo systemctl enable docker
# Hold the packages only after the desired version is installed;
# apt refuses to change held packages otherwise.
sudo apt-mark hold docker-ce docker-ce-cli
```
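The Aliyun script installs `docker-ce=${install_version}`, so `install_version` must hold the full apt version string, not just `27.4.1`. The sketch below derives it from `apt-cache madison docker-ce` output. `docker_pkg_version` is hypothetical. It assumes Docker's usual `epoch:version-revision~distro` format, for example `5:27.4.1-1~ubuntu.22.04~jammy`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a short Docker version (e.g. 27.4.1) to the full package
# version string, reading `apt-cache madison docker-ce` output on stdin.
docker_pkg_version() {
  local want="$1"
  awk -F'|' -v want="$want" '
    {
      v = $2
      gsub(/^[ \t]+|[ \t]+$/, "", v)            # trim the padding around the version column
      # Match ":<version>-" literally so 27.4.1 does not also match 27.4.10.
      if (index(v, ":" want "-") > 0) { print v; exit }
    }'
}
```

Usage: `install_version=$(apt-cache madison docker-ce | docker_pkg_version 27.4.1)`. An empty result means that version is not published for this Ubuntu release.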

Configuring the NVIDIA runtime

Install nvidia-container-toolkit

Reference: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

```shell
# Add NVIDIA's signing key and apt repository.
curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list \
  | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' \
  | sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
# Optional: enable the experimental packages.
sudo sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list
sudo apt-get update
sudo apt-get install -y nvidia-container-toolkit
```

Configure Docker to use the nvidia runtime:

```shell
nvidia-ctk runtime configure --runtime=docker
```

```
INFO[0000] Config file does not exist; using empty config
INFO[0000] Wrote updated config to /etc/docker/daemon.json
INFO[0000] It is recommended that docker daemon be restarted.
```

Confirm that the configuration was written:

```shell
cat /etc/docker/daemon.json
{
    "runtimes": {
        "nvidia": {
            "args": [],
            "path": "nvidia-container-runtime"
        }
    }
}
```
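A scriptable variant of this check is sketched below as a hypothetical helper. It does a plain-text match rather than real JSON parsing, so it assumes the file was written by `nvidia-ctk` as shown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the daemon.json content on stdin registers a
# "nvidia" runtime that points at nvidia-container-runtime (text match, not a JSON parser).
has_nvidia_runtime() {
  local cfg
  cfg=$(cat)
  printf '%s' "$cfg" | grep -q '"nvidia"' &&
    printf '%s' "$cfg" | grep -q 'nvidia-container-runtime'
}
```

Usage: `has_nvidia_runtime < /etc/docker/daemon.json`. If `jq` is installed, `jq -e '.runtimes.nvidia' /etc/docker/daemon.json` is a stricter alternative. After Docker is restarted, `docker info` should also list `nvidia` under `Runtimes`.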

Restart Docker:

```shell
systemctl restart docker
```

Verification

Method 1, using a plain `ubuntu` image:

```shell
docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi
```

```
Unable to find image 'ubuntu:latest' locally
latest: Pulling from library/ubuntu
d9d352c11bbd: Pull complete
Digest: sha256:b59d21599a2b151e23eea5f6602f4af4d7d31c4e236d22bf0b62b86d2e386b8f
Status: Downloaded newer image for ubuntu:latest
Thu Dec 12 19:02:45 2024
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.256.02   Driver Version: 470.256.02   CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:0A:00.0 N/A |                  N/A |
| 40%   40C    P0    N/A /  N/A |      0MiB /   981MiB |     N/A      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

Method 2, using the official `nvidia/cuda` image:

```shell
docker run --gpus all --rm --runtime=nvidia nvidia/cuda:11.4.3-runtime-ubuntu20.04 nvidia-smi
```

```
Unable to find image 'nvidia/cuda:11.4.3-runtime-ubuntu20.04' locally
11.4.3-runtime-ubuntu20.04: Pulling from nvidia/cuda
96d54c3075c9: Pull complete
1d8f82780678: Pull complete
fb7423b4aec8: Pull complete
f8ae240d6263: Pull complete
81bb5702a96f: Pull complete
eaab28ffdfa4: Pull complete
7795b051ada1: Pull complete
ae6c0f99e656: Pull complete
4c93aa93344d: Pull complete
Digest: sha256:3beb33b21bcea4e78399c37574eba400fe85b5f48a7188eec7208d9a9c459219
Status: Downloaded newer image for nvidia/cuda:11.4.3-runtime-ubuntu20.04

==========
== CUDA ==
==========

CUDA Version 11.4.3

Container image Copyright (c) 2016-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.
By pulling and using the container, you accept the terms and conditions of this license:
https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

A copy of this license is made available in this container at /NGC-DL-CONTAINER-LICENSE for your convenience.

Thu Dec 24 19:05:13 2025
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.256.02   Driver Version: 470.256.02   CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:0A:00.0 N/A |                  N/A |
| 40%   41C    P0    N/A /  N/A |      0MiB /   981MiB |     N/A      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

This confirms that containers can use the NVIDIA GPU.

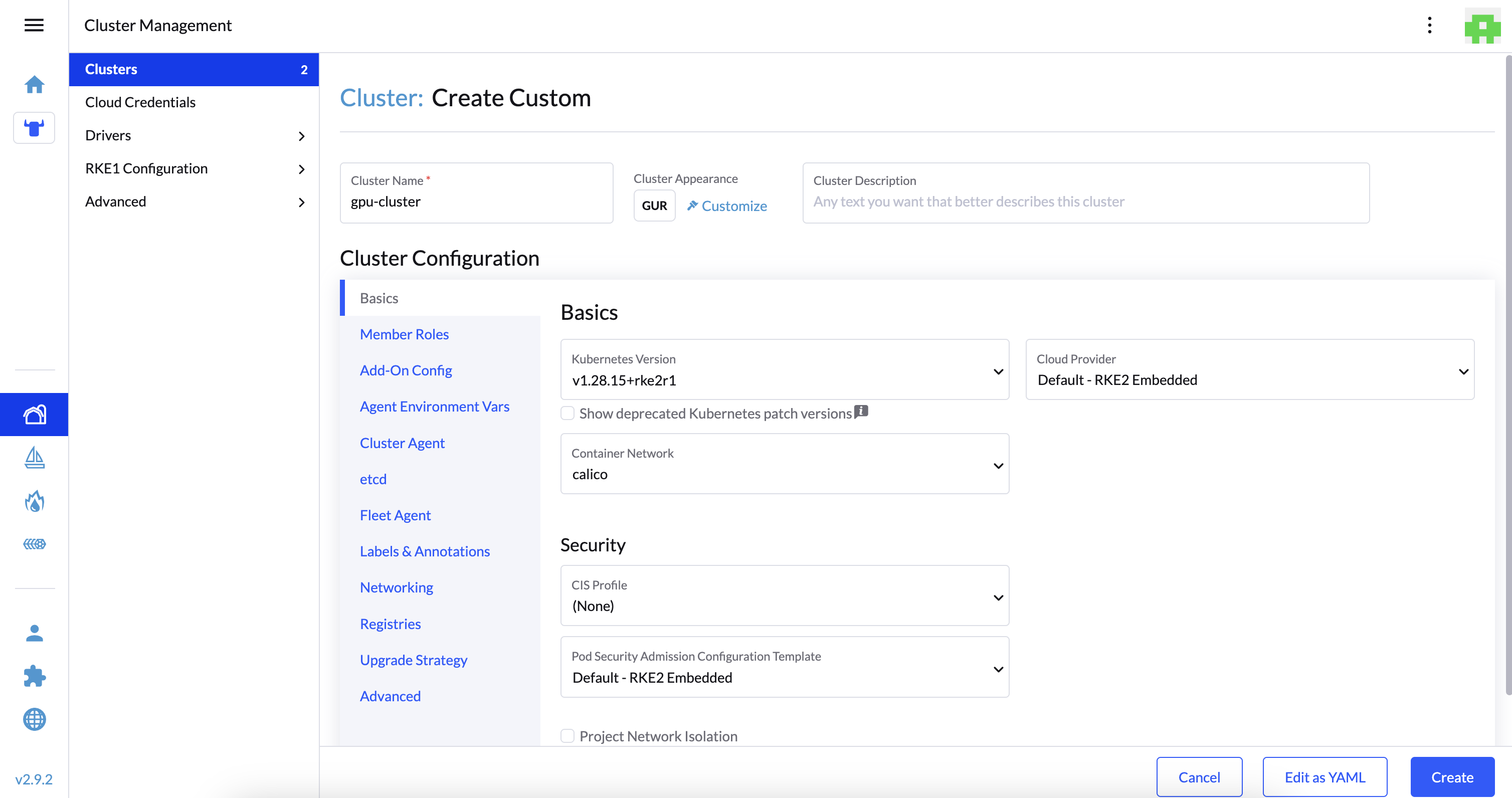

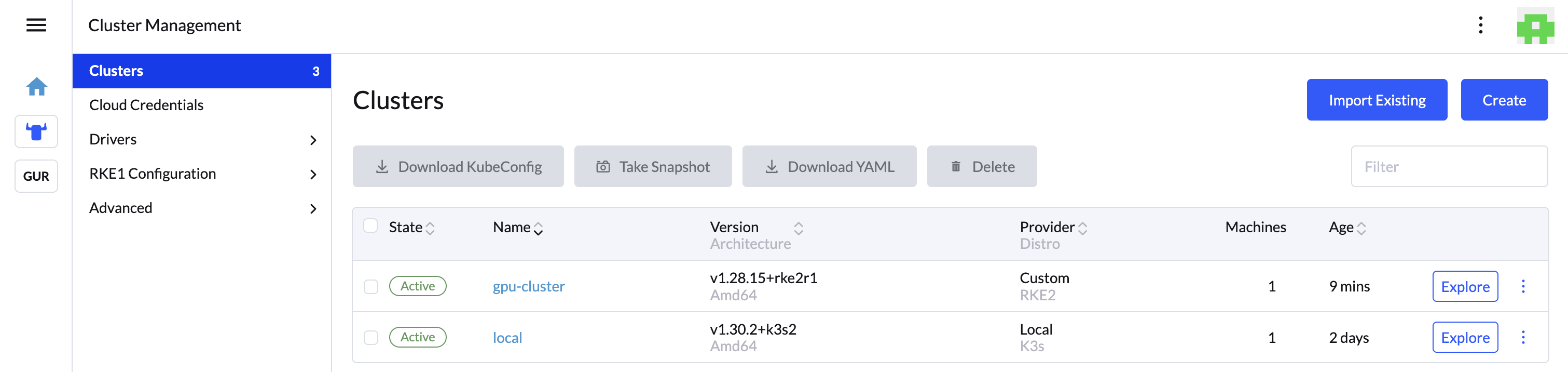

Installing the RKE2 cluster

Here the RKE2 cluster is created directly from Rancher.

Wait for Rancher to finish provisioning the cluster.

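Installing the NVIDIA GPU Operator

The NVIDIA GPU Operator is a Kubernetes Operator that simplifies managing and deploying NVIDIA GPU resources in a Kubernetes cluster. It automates the configuration and monitoring of the NVIDIA GPU driver and related components, such as CUDA, the container runtime and other GPU software.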

Add the NVIDIA GPU Operator Helm repository:

```shell
helm repo add nvidia https://helm.ngc.nvidia.com/nvidia
helm repo update
```

```shell
cat > nvidia-values.yaml << EOF
toolkit:
  enabled: true
  env:
    - name: CONTAINERD_CONFIG
      value: /var/lib/rancher/rke2/agent/etc/containerd/config.toml.tmpl
    - name: CONTAINERD_SOCKET
      value: /run/k3s/containerd/containerd.sock
    - name: CONTAINERD_RUNTIME_CLASS
      value: nvidia
    - name: CONTAINERD_SET_AS_DEFAULT
      value: "true"
EOF
```

Parameters:

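- `CONTAINERD_CONFIG`: path to the containerd configuration file. The default is `/etc/containerd/config.toml`. Change it to RKE2's containerd configuration path.
- `CONTAINERD_SOCKET`: path to the containerd socket. The default is `/run/containerd/containerd.sock`. Change it to RKE2's containerd socket path.
- `CONTAINERD_RUNTIME_CLASS`: name of the runtime class. The default is `nvidia`.
- `CONTAINERD_SET_AS_DEFAULT`: whether to make the nvidia runtime containerd's default runtime.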
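To confirm that the toolkit container actually registered the runtime, look on the GPU node for an `nvidia` runtime table in the rendered containerd config. The sketch below uses a hypothetical helper. The exact table path differs between containerd config versions, so the pattern only anchors on `...runtimes.nvidia]` (quoted or unquoted).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the containerd TOML config on stdin declares a
# runtime table named "nvidia", e.g. [plugins."io.containerd.grpc.v1.cri".containerd.runtimes."nvidia"].
containerd_has_nvidia() {
  grep -Eq '^[[:space:]]*\[plugins\..*\.runtimes\."?nvidia"?\]'
}
```

On the node: `containerd_has_nvidia < /var/lib/rancher/rke2/agent/etc/containerd/config.toml`. RKE2 renders this file from `config.toml.tmpl`.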

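Install gpu-operator with Helm. The driver is already installed in the VM, so the operator's own driver deployment is disabled with `driver.enabled=false`: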

```shell
helm install gpu-operator nvidia/gpu-operator -n gpu-operator --create-namespace --set driver.enabled=false -f nvidia-values.yaml
```

Check the status of the pods in the gpu-operator namespace:

```
NAME                                                          READY   STATUS      RESTARTS   AGE
gpu-feature-discovery-gnts9                                   1/1     Running     0          2m26s
gpu-operator-65b66775b8-nd9v5                                 1/1     Running     0          2m56s
gpu-operator-node-feature-discovery-gc-cb97889d5-5tcrf        1/1     Running     0          2m56s
gpu-operator-node-feature-discovery-master-7fbc47d656-plvsz   1/1     Running     0          2m56s
gpu-operator-node-feature-discovery-worker-2vncg              1/1     Running     0          2m57s
nvidia-container-toolkit-daemonset-hhfk5                      1/1     Running     0          2m27s
nvidia-cuda-validator-v6srp                                   0/1     Completed   0          2m4s
nvidia-dcgm-exporter-xwxkf                                    1/1     Running     0          2m27s
nvidia-device-plugin-daemonset-dbbb7                          1/1     Running     0          2m27s
nvidia-operator-validator-mn52r                               1/1     Running     0          2m27s
```
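Rather than reading the list by eye, you can check the table mechanically. The sketch assumes the default `kubectl get pods` column layout, where STATUS is the third column. `check_operator_pods` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin, print every pod whose
# STATUS is neither Running nor Completed, and return 1 if any such pod exists.
check_operator_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 ": " $3; bad = 1 }
       END { exit bad }'
}
```

`kubectl get pods -n gpu-operator | check_operator_pods` prints nothing and succeeds when every pod is `Running` or `Completed`. The validator pods normally finish as `Completed`.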

If the installation fails with the following error, try the repository URL https://nvidia.github.io/gpu-operator instead.

Reference: https://github.com/NVIDIA/gpu-operator/issues/13#issuecomment-2161607217

```
Error: looks like "https://helm.ngc.nvidia.com/nvidia" is not a valid chart repository or cannot be reached: failed to fetch https://helm.ngc.nvidia.com/nvidia/index.yaml : 403 Forbidden
```
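You can script the fallback described above. The sketch assumes the GitHub Pages URL serves the same `gpu-operator` chart as NGC, as reported in the linked issue. `add_nvidia_repo` is a hypothetical helper that only uses `helm repo add` and `helm repo update`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the chart repo as "nvidia", trying NGC first and falling back
# to the GitHub Pages URL. Prints the URL that was added; helm's own messages go to stderr.
add_nvidia_repo() {
  local ngc="https://helm.ngc.nvidia.com/nvidia"
  local gh="https://nvidia.github.io/gpu-operator"
  if helm repo add nvidia "$ngc" >&2; then
    echo "$ngc"
  elif helm repo add nvidia "$gh" >&2; then
    echo "$gh"
  else
    return 1
  fi
  helm repo update >&2
}
```

The chart is still referenced as `nvidia/gpu-operator` in the `helm install` command, whichever URL succeeded.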

If pulling the operator image fails with 403:

```
Failed to pull image "nvcr.io/nvidia/gpu-operator:v24.9.2" : failed to pull and unpack image "nvcr.io/nvidia/gpu-operator:v24.9.2" : failed to resolve reference "nvcr.io/nvidia/gpu-operator:v24.9.2" : unexpected status from HEAD request to https://nvcr.io/v2/nvidia/gpu-operator/manifests/v24.9.2: 403 Forbidden
```

This depends on the network region you are pulling from. Try again from a different region.

Creating a Deployment to verify that the GPU is usable

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: gpu-test
  name: gpu-test
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gpu-test
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: gpu-test
      namespace: default
    spec:
      containers:
        - image: nvidia/cuda:11.4.3-runtime-ubuntu20.04
          imagePullPolicy: IfNotPresent
          name: gpu-test
          resources:
            limits:
              nvidia.com/gpu: '1'
            requests:
              nvidia.com/gpu: '1'
          stdin: true
          tty: true
      runtimeClassName: nvidia
```

Exec into the pod and check that `nvidia-smi` runs:

```shell
kubectl exec -it gpu-test-66bcf7c785-p82km -- nvidia-smi -L
```
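The pod name in the command above is specific to this environment. The sketch below avoids copying it by running `kubectl exec` against the Deployment, in which case kubectl picks one of its pods. `verify_gpu_deployment` is hypothetical, and the 300 s rollout timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for a Deployment (default: gpu-test in namespace default)
# to roll out, run `nvidia-smi -L` in one of its pods and check that a GPU is listed.
verify_gpu_deployment() {
  local deploy="${1:-gpu-test}" ns="${2:-default}" out
  kubectl -n "$ns" rollout status "deployment/$deploy" --timeout=300s >&2 || return 1
  out=$(kubectl -n "$ns" exec "deploy/$deploy" -- nvidia-smi -L) || return 1
  echo "$out"
  # Succeed only if at least one "GPU <n>:" line came back.
  printf '%s\n' "$out" | grep -q '^GPU [0-9]'
}
```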

If the GPU model is printed, the GPU Operator has been deployed successfully.

```
GPU 0: NVIDIA GeForce GT 710 (UUID: GPU-62b0c46d-488e-6257-7419-c147d83ee409)
```

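Troubleshooting

If pods that request a GPU fail, containerd may not be reading the NVIDIA runtime settings from config.toml correctly. To rule this out, first check whether containerd itself can use the NVIDIA runtime. Start a container directly with ctr and see whether it can access the GPU: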

```shell
/var/lib/rancher/rke2/bin/ctr --address /run/k3s/containerd/containerd.sock -n k8s.io run --rm -t --gpus 0 harbor.zerchin.xyz/nvidia/cuda:11.4.3-runtime-ubuntu20.04 nvidia-test nvidia-smi
```
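`ctr run` does not pull missing images, so the image must already exist in the `k8s.io` namespace. The image above comes from a private Harbor registry. The sketch below pulls first and then runs. `ctr_gpu_test` and the `CTR`/`CONTAINERD_SOCK` overrides are hypothetical. The default image reference is the public Docker Hub copy of the CUDA image used earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull an image into RKE2's containerd (namespace k8s.io) and run
# nvidia-smi in it with GPU 0 attached, bypassing Kubernetes entirely.
CTR="${CTR:-/var/lib/rancher/rke2/bin/ctr}"
CONTAINERD_SOCK="${CONTAINERD_SOCK:-/run/k3s/containerd/containerd.sock}"

ctr_gpu_test() {
  local image="${1:-docker.io/nvidia/cuda:11.4.3-runtime-ubuntu20.04}"
  # ctr needs a fully qualified reference and does not auto-pull on `run`.
  "$CTR" --address "$CONTAINERD_SOCK" -n k8s.io image pull "$image" >&2 || return 1
  "$CTR" --address "$CONTAINERD_SOCK" -n k8s.io run --rm -t --gpus 0 "$image" nvidia-test nvidia-smi
}
```

Suppose this container prints the `nvidia-smi` table but the Kubernetes pod still fails. The problem is then more likely on the Kubernetes side (RuntimeClass, device plugin) than in containerd.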